A few weeks back, I wrote a post saying that my MySQL NDB cluster was already running. This is a follow-up post on how I set it up.

Before I dug in, I read some articles on best practices for MySQL Cluster installations. One of the sources I read is this quite helpful presentation.

The plan was to set up the cluster with 6 components:

- 2 Management nodes

- 2 MySQL nodes

- 2 NDB nodes

Based on the best practices, I only need 4 servers to accomplish this setup. With these tips in mind, this is the plan that I came up with:

- 2 VMs (2 CPUs, 4GB RAM, 20GB drives) – will serve as MGM nodes and MySQL servers

- 2 Supermicro 1Us (4-core, 8GB RAM, RAID 5 of 4 140GB 10k rpm SAS) – will serve as NDB nodes

- all servers will be installed with a minimal installation of CentOS 6.2

The servers will use this IP configuration:

- mm0 – 192.168.1.162 (MGM + MySQL)

- mm1 – 192.168.1.211 (MGM + MySQL)

- lbindb1 – 192.168.1.164 (NDB node)

- lbindb2 – 192.168.1.163 (NDB node)

That’s the plan, now to execute…

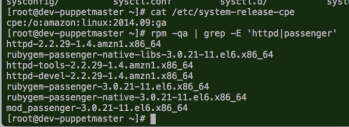

I downloaded the binary packages from this mirror. If you want a different mirror, you can choose from the main download page. I only need these two:

To install the packages, I ran these commands on the respective servers:

mm0> rpm -Uhv --force MySQL-Cluster-server-gpl-7.2.5-1.el6.x86_64.rpm

mm0> mkdir /var/lib/mysql-cluster

mm1> rpm -Uhv --force MySQL-Cluster-server-gpl-7.2.5-1.el6.x86_64.rpm

mm1> mkdir /var/lib/mysql-cluster

lbindb1> rpm -Uhv --force MySQL-Cluster-server-gpl-7.2.5-1.el6.x86_64.rpm

lbindb1> mkdir -p /var/lib/mysql-cluster/data

lbindb2> rpm -Uhv --force MySQL-Cluster-server-gpl-7.2.5-1.el6.x86_64.rpm

lbindb2> mkdir -p /var/lib/mysql-cluster/data

The mkdir commands will make sense in a bit…

My cluster uses these two configuration files:

- /etc/my.cnf – used on the NDB nodes and MySQL servers (both mm[01] and lbindb[12])

- /var/lib/mysql-cluster/config.ini – used on the MGM nodes only (mm[01])

Contents of /etc/my.cnf:

[mysqld]

# Options for mysqld process:

ndbcluster # run NDB storage engine

ndb-connectstring=192.168.1.162,192.168.1.211 # location of management server

[mysql_cluster]

# Options for ndbd process:

ndb-connectstring=192.168.1.162,192.168.1.211 # location of management server

Contents of /var/lib/mysql-cluster/config.ini:

[ndbd default]

# Options affecting ndbd processes on all data nodes:

NoOfReplicas=2 # Number of replicas; the 2 NDB nodes hold one copy each

DataMemory=1024M # How much memory to allocate for data storage

IndexMemory=512M

DiskPageBufferMemory=1048M

SharedGlobalMemory=384M

MaxNoOfExecutionThreads=4

RedoBuffer=32M

FragmentLogFileSize=256M

NoOfFragmentLogFiles=6

[ndb_mgmd]

# Management process options:

NodeId=1

HostName=192.168.1.162 # Hostname or IP address of MGM node

DataDir=/var/lib/mysql-cluster # Directory for MGM node log files

[ndb_mgmd]

# Management process options:

NodeId=2

HostName=192.168.1.211 # Hostname or IP address of MGM node

DataDir=/var/lib/mysql-cluster # Directory for MGM node log files

[ndbd]

# lbindb1

HostName=192.168.1.164 # Hostname or IP address

DataDir=/var/lib/mysql-cluster/data # Directory for this data node's data files

[ndbd]

# lbindb2

HostName=192.168.1.163 # Hostname or IP address

DataDir=/var/lib/mysql-cluster/data # Directory for this data node's data files

# SQL nodes

[mysqld]

HostName=192.168.1.162

[mysqld]

HostName=192.168.1.211
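As a rough sanity check on the config.ini values above, the memory and disk footprint per data node can be added up. This is a minimal sketch: it only sums the four large memory settings, so it is a lower bound (RedoBuffer and other smaller buffers come on top), and it relies on the redo log being laid out as NoOfFragmentLogFiles sets of 4 files, each FragmentLogFileSize in size. The variable names are only for illustration.

```shell
#!/bin/sh
# Values copied from config.ini above, in MB.
DATA_MEMORY=1024
INDEX_MEMORY=512
DISK_PAGE_BUFFER=1048
SHARED_GLOBAL=384

# Rough lower bound on RAM used by each data node.
ram_mb=$((DATA_MEMORY + INDEX_MEMORY + DISK_PAGE_BUFFER + SHARED_GLOBAL))

# Redo log on disk: NoOfFragmentLogFiles (6) sets of 4 files,
# each FragmentLogFileSize (256M) in size.
redo_mb=$((6 * 4 * 256))

echo "approx. RAM per data node: ${ram_mb} MB"   # 2968 MB
echo "redo log per data node: ${redo_mb} MB"     # 6144 MB
```

Roughly 3GB of memory fits comfortably in the 8GB of the NDB servers, and the 6GB redo log has to fit under the DataDir of each data node.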

Once the configuration files were in place, I started the cluster with these commands (NOTE: Make sure that the firewall is properly configured first):

mm0> ndb_mgmd --ndb-nodeid=1 -f /var/lib/mysql-cluster/config.ini

mm0> service mysql start

mm1> ndb_mgmd --ndb-nodeid=2 -f /var/lib/mysql-cluster/config.ini

mm1> service mysql start

lbindb1> ndbmtd

lbindb2> ndbmtd
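The firewall note above is easy to skip, so here is a minimal sketch of rules that let the four cluster hosts talk to each other. Allowing all TCP traffic between the cluster hosts is an assumption for illustration, not necessarily the rule set used here: the management server listens on port 1186 by default, but data nodes pick their ports dynamically unless ServerPort is set in config.ini. The script only prints the iptables commands, so it is safe to run anywhere.

```shell
#!/bin/sh
# Cluster hosts from the IP plan above.
CLUSTER_HOSTS="192.168.1.162 192.168.1.211 192.168.1.164 192.168.1.163"

# Print (do not apply) one iptables rule per cluster host.
# Assumption: all TCP between cluster hosts is allowed.
firewall_rules() {
  for ip in $CLUSTER_HOSTS; do
    echo "iptables -I INPUT -s $ip -p tcp -j ACCEPT"
  done
}

firewall_rules
```

After reviewing the printed rules, they can be run as root on each host, and on CentOS 6 `service iptables save` keeps them across reboots.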

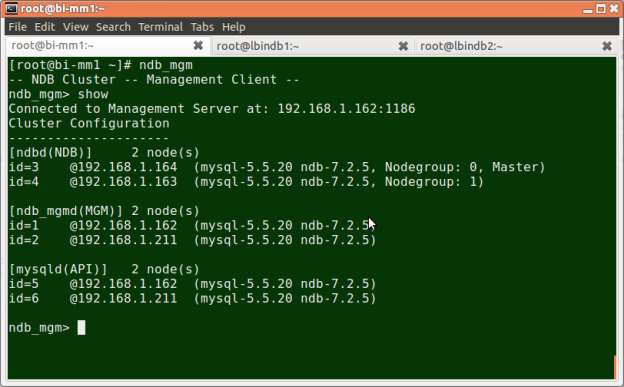

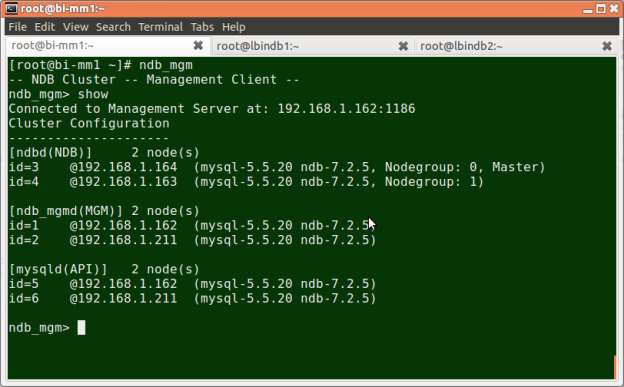

To verify that my cluster is really running, I logged in to one of the MGM nodes and ran ndb_mgm like this:
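The exact invocation is not preserved here. One common way to do this check, assuming the management client is installed on the host, is to pass the `show` command to ndb_mgm non-interactively. The `show_cluster` helper name is hypothetical; the connect string reuses the two MGM addresses from the plan.

```shell
#!/bin/sh
# Connect string listing both management nodes from the plan above.
CONNECT="192.168.1.162,192.168.1.211"

# Print the node list as seen by the management server.
# Falls back to a message when the ndb_mgm client is not installed.
show_cluster() {
  if command -v ndb_mgm >/dev/null 2>&1; then
    ndb_mgm --ndb-connectstring="$CONNECT" -e show
  else
    echo "ndb_mgm not found; run this on one of the MGM nodes" >&2
    return 1
  fi
}

show_cluster || true
```

In a healthy cluster, the listing should include both data nodes, both management nodes, and both SQL nodes as connected.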

I was able to set this up a few weeks back. Unfortunately, I haven’t had the chance to really test it with our ETL scripts… I was occupied with other responsibilities…

Thinking about it now, I may have to scrap the whole cluster and install MySQL with InnoDB + lots of RAM! hmmm… Maybe I’ll benchmark it first…

Oh well… 🙂

References: